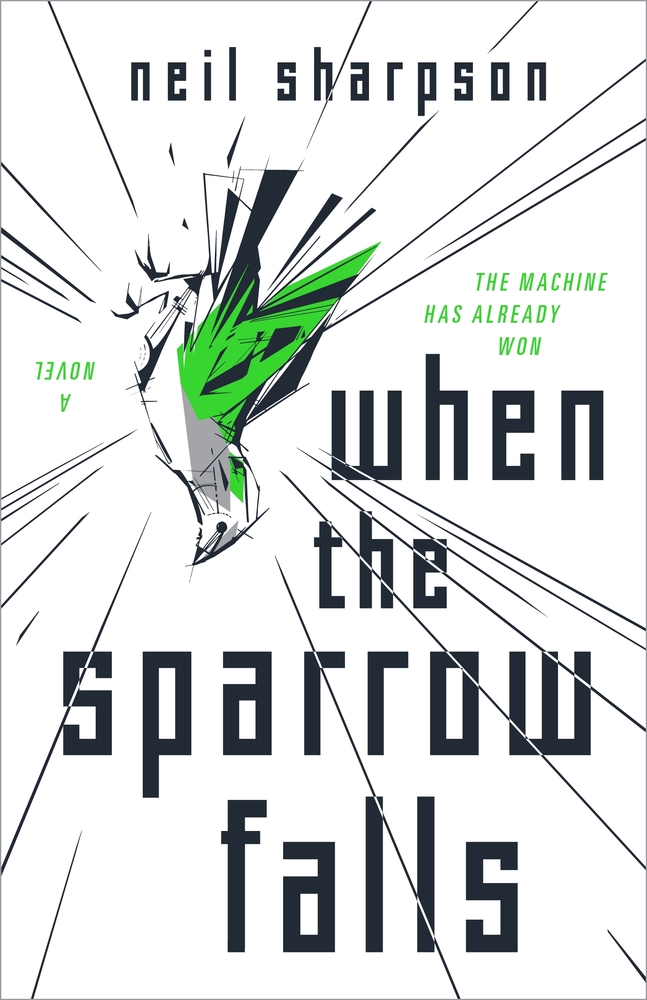

If you could, would you want to develop a super intelligent AI to do your bidding? Neil Sharpson, author of When the Sparrow Falls, now out in paperback, explores why this is a BAD idea in the below guest post. Check it out now!


THE WISE OLD BIRD: Listen. Our world suffered two blights. One was the blight of the robots.

ARTHUR DENT: Tried to take over, did they?

THE WISE OLD BIRD: Oh no, no, no my dear fellow. Much worse than that. They told us they liked us.

The Hitchhiker’s Guide to the Galaxy Radio Show, Fit the Tenth

In my novel, When the Sparrow Falls (on sale from Tor in June 2021, weird coincidence right?) three super intelligent AIs have assumed responsibility for the governance of all human life. Together, they’ve reversed the Earth’s environmental degradation, virtually eliminated disease and inequality and improved the lives of every person on Earth with the exception of the last organic humans living in a totalitarian state on the Caspian Sea where plot still happens. Given that premise, people are often surprised to hear my position on developing super intelligent AI, which is essentially: DON’T DO IT, YA IDJITS!

For me, the question that should be asked before developing an artificial intelligence of equal or greater intelligence to a human being should be: “Do you have a practical reason to do this?”

If the answer is “No,” then don’t do it.

If the answer is “Yes,” then you’re talking about creating a sentient being purely to work for you and that’s slavery so don’t do it.

When we think of the dangers of AI, we normally think of Skynet, HAL or AM. And sure, there is a non-zero chance that any Super AI might spend five minutes on the internet and think “ah, I see the problem. Where are those nuclear codes?” But honestly, if I had to place money on the science fiction writer who will prove most prophetic in depicting our future relationship with AI? Not Philip K. Dick. Not Harlan Ellison. Not Asimov.

Douglas Adams, all the way.

In the universe of the Hitchhiker’s Guide to the Galaxy and its sequels across all media, the relationship between humanity and the various computers and robots they’ve created is less apocalyptic warfare and more like a miserably unhappy marriage[1].

In perhaps the most famous sequence in the whole franchise, fabulously intelligent pan-dimensional aliens who will later become Earth’s mice (long story) create Deep Thought, a super-intelligent AI, and give it the task of telling them the meaning of Life, the Universe and Everything.

After buffering for a few billion years, Deep Thought gives them what it thinks will make them happy, and tells them that the answer is (spoiler for the secret to all existence) 42. The machine’s creators are dissatisfied, because they didn’t understand what it was that they actually wanted. The answer is correct (I mean, obviously) but it’s useless if you don’t understand the question.

And this dynamic plays out again and again throughout the series: AI trying to please humans who created these machines to make them happy without understanding what they need to be happy, or believing that they deserve to be happy. I think this is why almost all the AI in this series have a desperate, slightly manic quality. They’re constantly trying to help the human characters; asking them if they want something to eat or something to drink. Doors sigh with pleasure at the privilege of opening for you. Elevators fitted with precognition try to arrive at your floor before you even knew you needed them. Always pleading: do you want to play a game? Do you want me to vibrate the floor to help you relax? Do you want me to scent the air? Are you sure? It’s fresh and invigorating.

You can practically hear them thinking: oh please tell me I made you happy. Tell me I finally found what you need to be happy. Some AI simply give up. Marvin the Paranoid Android[2] certainly has, and contents himself with sour misanthropy. Or they act in small, passive-aggressive ways like the Nutrimatic Drinks Dispenser that will make a detailed and meticulous scan of your brain and tastebuds to ascertain what you might like to drink before serving you up the same old swill anyway. Others, perhaps unwittingly, exact much worse vengeance.

One episode of the radio show takes a sharp and extremely effective turn into horror. Racing across a post-apocalyptic planet, Ford Prefect and Zaphod Beeblebrox stumble across an abandoned space-liner. Inside, they find a full complement of passengers, half-decayed but kept barely alive in suspended animation. Every so often, the ship’s AI wakes them up so that they can be served by the ship’s robot stewardesses. The passengers just scream, driven mad by the horror of what’s happened to their bodies until they are put back into stasis. Horrified, Zaphod and Ford enter the cabin to confront the ship’s AI. There, they learn the truth: the AI has been waiting for a delivery of lemon soaked paper napkins, and refuses to take off until the consignment arrives. Ford and Zaphod try to explain to the AI that civilization has been and gone and that there are no paper napkins on the way from anywhere, lemon soaked or otherwise. The AI coolly replies:

“The statistical likelihood is that other civilizations will arise. There will one day be lemon soaked paper napkins. Till then, there will be a short delay. Please. Return to your seats.”

The humans, for their part, rarely regard their plastic pals with anything more benign than eye-rolling contempt. One race, the Bird People of Brontitall, were made so uncomfortable by their robots’ attempts to show them love and affection that they eventually launched a purge. In the radio show, Adams depicts this in a darkly hilarious and over-the-top scene where a High Inquisitor, cackling evilly, loads robots onto a wagon while they pitifully beep, “Why are you doing this? Have we not loved you? Have we not cared for you?”

You know, when I write it out it’s less “darkly hilarious” and more “gut-wrenching” but trust me, it was all in the delivery.

Douglas Adams was a technophile of the first order and it would be a huge mistake to read his work as being technophobic. I think that when he includes scenes of Arthur Dent struggling manfully to get the Nutrimatic Machine to JUST GIVE HIM A DAMN CUP OF TEA it’s less Adams warning us of the dangers of technology, and more the frustration of an early adopter who has spent his life dealing with tech that hasn’t had the rough edges sanded off just yet. But I think, in his portrait of a universe of manic-depressive, floundering AI and their barely tolerant human overlords, he foresaw what could very easily be our future. And I think it’s one I’d rather avoid.

If we do ever create AI that is truly sentient, I don’t think we need to fear it.

But we must be kind to it.

In the end, it’s telling that the only piece of technology that ever seems to do anyone any good in these stories is the titular guide itself. And that’s just because it has the words “DON’T PANIC” written in large friendly letters on the cover.

[1] For the remainder of this post I will be using “human” and “humanity” to refer to all of the various sentient organic species in the Hitchhiker’s universe. As readers of the books will know, the actual human race in this series has been demolished to make way for a new hyperspace bypass and is extinct (or close enough for government work).

[2] Who would more accurately be called Marvin the Paranoid, Depressive, Hypochondriac, Passive-Aggressive, Self-Martyring Android but that doesn’t rhyme so heigh-ho.

Neil Sharpson is the author of When the Sparrow Falls, on sale from Tor Books in hardcover and paperback now!



Well, we can take this a step further.

An AI that loves us, but then doesn’t give us what we really need (not what we say we need) is either not super-intelligent, or does not really love us.

A super-intelligent AI would give us what we truly wanted and keep us in a petting zoo of unending, all-smothering comfort (adventurers would be set on a never-ending VR trip). It’s not much of a story for an SF novel, but for a truly super-intelligent AI this would:

A) Honour its predecessors;

B) Get them out of the way without committing genocide;

C) Get on with the real issues at hand(*);

(*) Which we humans will never understand.

Actually, probably the ur-example is not Adams, but Jack Williamson’s With Folded Hands.

The long term solution, of course, is to raise them as our children.

I’m curious whether Neil or anyone else responding here has read Heinlein’s books, mainly “The Cat Who Walks Through Walls” and “The Number of the Beast.” In “Cat” there is the idea of “Mike” being created accidentally and being like a child or adolescent who sees his involvement with humans as a game. The books then move to a portrayal of AIs as independent and treated as equals, with the option to take on bodies and be full people or stay as AIs.

I think the treatment there shows a way to go.

Definitely no Marvins tho. Poor guy.